This article explains the key differences between supervised and unsupervised learning, covering their definitions, techniques, advantages, and limitations. It also examines the role of labeled data in supervised learning and of unlabeled data in unsupervised learning.

Definition of Supervised Learning

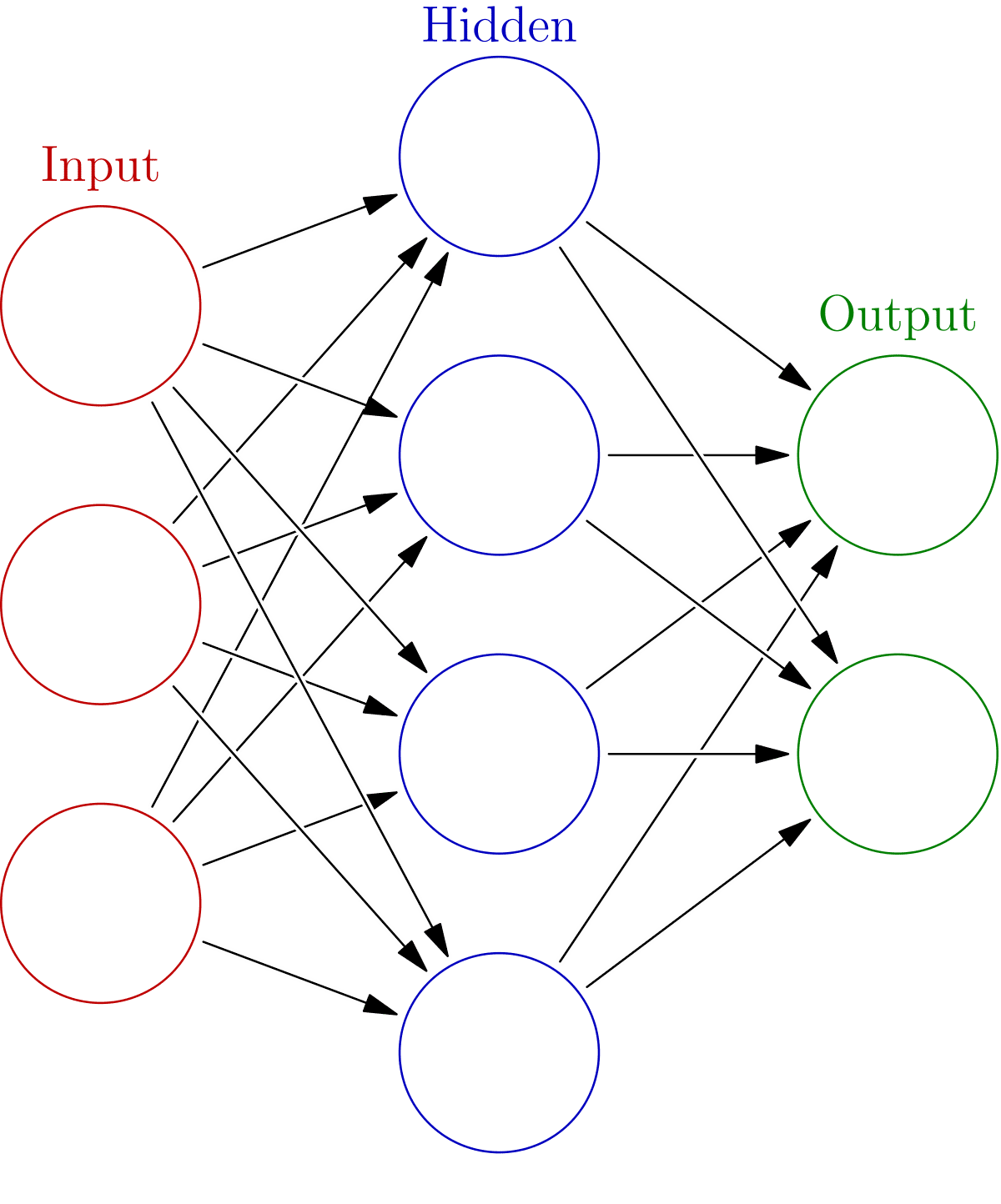

Supervised learning is a type of machine learning where algorithms learn from labeled data. The key concept here is that the model is trained using a dataset that includes both input features and the corresponding output labels. Essentially, the algorithm learns to map inputs to outputs based on the provided examples. This process enables the model to make predictions on new, unseen data by recognizing patterns it learned during training.

Some core principles of supervised learning include:

- Labeled Data: The presence of labeled data is crucial as it serves as the foundation for training the algorithm. Each input is paired with the correct output.

- Prediction: The primary goal is to predict outcomes for new data based on the learned relationships.

- Feedback Loop: During training, the model's predictions are compared with the known labels, and the resulting error is used to adjust the model, improving its accuracy over successive iterations.

In summary, supervised learning is all about teaching models through examples. This method is widely used in applications such as spam detection, image recognition, and predictive analytics.
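To make the idea of learning from labeled examples concrete, here is a minimal sketch in plain Python. It uses a 1-nearest-neighbor rule, chosen only for brevity. The feature values, the labels, and the function name `predict_nearest` are illustrative assumptions and do not come from any particular library.

```python
import math

# Hypothetical labeled data: each example pairs input features with its correct label.
labeled_examples = [
    ((1.0, 1.0), "ham"),
    ((1.2, 0.8), "ham"),
    ((5.0, 5.2), "spam"),
    ((4.8, 5.1), "spam"),
]

def predict_nearest(examples, features):
    """Predict the label of the training example closest to `features`."""
    _, label = min(examples, key=lambda pair: math.dist(pair[0], features))
    return label

# Predictions for new, unseen inputs
print(predict_nearest(labeled_examples, (1.1, 0.9)))  # ham
print(predict_nearest(labeled_examples, (5.1, 4.9)))  # spam
```

The model here is nothing more than the stored examples. Real systems usually fit a compact set of parameters instead, but the input-to-label mapping is the same idea.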

Definition of Unsupervised Learning

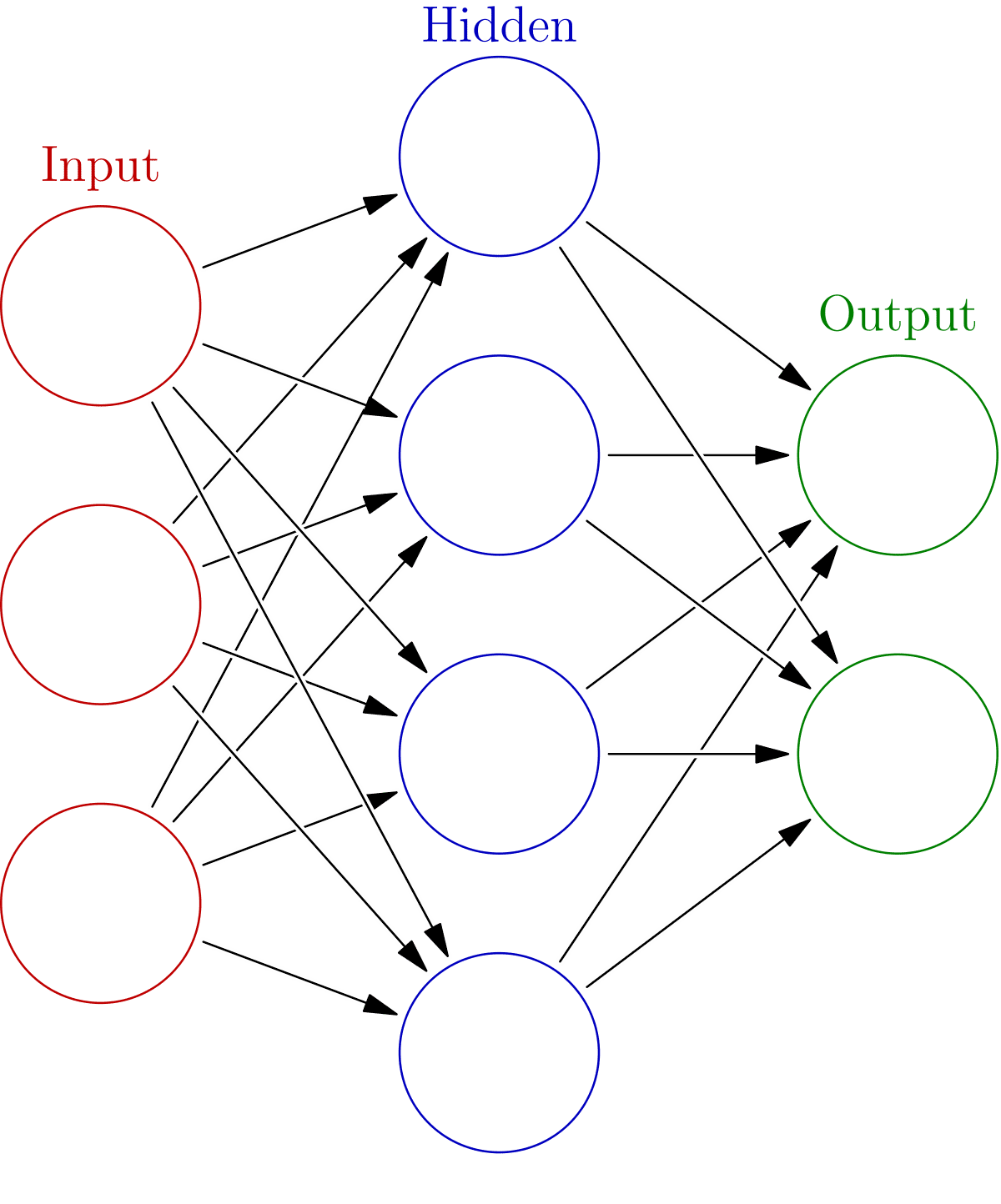

Unsupervised learning, on the other hand, deals with data that is not labeled. Here, the algorithms attempt to find hidden patterns or intrinsic structures within the data without any prior guidance. This approach is particularly useful for discovering relationships in data sets where labels are not available.

Key features of unsupervised learning include:

- Unlabeled Data: Unlike supervised learning, there are no output labels; the model analyzes the input data to identify patterns.

- Clustering: A common technique where the algorithm groups similar data points together based on shared characteristics.

- Dimensionality Reduction: Techniques that simplify data by reducing the number of variables while retaining essential information.

In essence, unsupervised learning helps in understanding the data’s underlying structure. It’s commonly applied in customer segmentation, anomaly detection, and market basket analysis.
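As a rough illustration of clustering without labels, the sketch below implements a basic k-means loop in plain Python. The sample points, the choice of two clusters, and the fixed starting centroids are assumptions made so the example is deterministic; practical implementations usually pick starting centroids at random and check for convergence.

```python
import math

def nearest(point, centroids):
    """Index of the centroid closest to `point`."""
    return min(range(len(centroids)), key=lambda k: math.dist(point, centroids[k]))

def kmeans(points, initial_centroids, iterations=10):
    """Alternate between assigning points to centroids and recomputing centroids."""
    centroids = list(initial_centroids)
    for _ in range(iterations):
        assignments = [nearest(p, centroids) for p in points]
        for k in range(len(centroids)):
            members = [p for p, a in zip(points, assignments) if a == k]
            if members:  # keep the old centroid if a cluster ends up empty
                centroids[k] = tuple(sum(axis) / len(members) for axis in zip(*members))
    return [nearest(p, centroids) for p in points], centroids

# Hypothetical unlabeled data: no output labels are supplied anywhere.
points = [(1.0, 1.0), (1.5, 2.0), (1.0, 1.5), (8.0, 8.0), (9.0, 8.5), (8.5, 9.0)]
assignments, centroids = kmeans(points, initial_centroids=[points[0], points[3]])
print(assignments)  # [0, 0, 0, 1, 1, 1]
```

The cluster numbers carry no meaning by themselves; deciding what each group represents is left to the analyst.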

Supervised Learning Techniques

Several techniques are employed in supervised learning to build predictive models. Here are a few notable examples:

- Linear Regression: This technique predicts a continuous outcome based on the linear relationship between input features and the target variable.

- Decision Trees: A flowchart-like structure where decisions are made based on the input features, leading to predictions.

- Support Vector Machines (SVM): This method finds the decision boundary that separates the classes with the widest possible margin.

- Random Forest: An ensemble technique that trains many decision trees on random subsets of the data and features, then combines their predictions to improve accuracy and robustness.

These techniques enable businesses to make informed decisions based on data analysis, enhancing predictive accuracy across various applications such as finance, healthcare, and marketing.
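Of the techniques listed above, linear regression is the simplest to write out by hand. The following sketch fits a line to one input feature using the ordinary least squares formulas. The data values and the function name `fit_line` are hypothetical and chosen so the arithmetic is easy to follow.

```python
def fit_line(xs, ys):
    """Ordinary least squares for a single feature: returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Hypothetical training data that follows y = 2x + 1 exactly
xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.0, 5.0, 7.0, 9.0]

slope, intercept = fit_line(xs, ys)
print(slope, intercept)       # 2.0 1.0
print(slope * 5 + intercept)  # prediction for x = 5 -> 11.0
```

With several input features the same idea is normally solved with matrix operations from a numerical library rather than by hand.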

Unsupervised Learning Techniques

Unsupervised learning techniques focus on analyzing and interpreting data that lacks labels. This type of learning enables algorithms to identify patterns, group similar data points, and draw conclusions without pre-existing categories. Here are some prominent techniques:

- Clustering: This technique involves grouping data points based on their similarities. For example, customer segmentation uses clustering to identify different groups of customers based on purchasing behavior.

- Dimensionality Reduction: Methods like Principal Component Analysis (PCA) reduce the number of features in a dataset while retaining essential information. This helps simplify data visualization and analysis.

- Association Rule Learning: This technique uncovers interesting relationships between variables in large databases. A common application is market basket analysis, where businesses identify products frequently purchased together.

- Anomaly Detection: This technique finds rare items or events in data. For instance, it is used in fraud detection systems to identify unusual transactions.

These techniques are essential for extracting insights from large datasets and discovering hidden structures in data.
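Market basket analysis, mentioned above, comes down to counting how often items appear together. The sketch below computes two standard association-rule measures, support and confidence, for pairs of items. The baskets and helper names are made up for illustration; algorithms such as Apriori add pruning so the counting stays feasible on large catalogs.

```python
from collections import Counter
from itertools import combinations

# Hypothetical transactions; each set is one customer's basket.
baskets = [
    {"bread", "butter", "milk"},
    {"bread", "butter"},
    {"bread", "jam"},
    {"milk", "eggs"},
    {"bread", "butter", "eggs"},
]

item_counts = Counter(item for basket in baskets for item in basket)
pair_counts = Counter(
    pair for basket in baskets for pair in combinations(sorted(basket), 2)
)

def support(a, b):
    """Fraction of all baskets that contain both a and b."""
    return pair_counts[tuple(sorted((a, b)))] / len(baskets)

def confidence(a, b):
    """Of the baskets containing a, the fraction that also contain b."""
    return pair_counts[tuple(sorted((a, b)))] / item_counts[a]

print(support("bread", "butter"))     # 0.6
print(confidence("bread", "butter"))  # 0.75
print(confidence("butter", "bread"))  # 1.0
```

Confidence is directional: here every basket with butter also has bread, but not every basket with bread has butter.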

Relationship between Supervised and Unsupervised Learning

The relationship between supervised and unsupervised learning is complementary. While supervised learning requires labeled data to make predictions, unsupervised learning excels at exploring unlabeled data. Together, they provide a comprehensive approach to data analysis:

- Sequential Learning: Unsupervised learning can be used to preprocess data, identifying patterns that can inform supervised models. For example, clustering might reveal natural groupings that help label data for supervised learning.

- Data Enrichment: Insights gained from unsupervised techniques can enhance supervised learning models. By understanding the underlying structures, models can be trained more effectively.

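- Iterative Improvement: Results from supervised learning can guide further unsupervised exploration. For example, clustering the samples a classifier gets wrong may reveal a subgroup that needs more labeled examples, creating a feedback loop that improves overall model performance.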

In conclusion, both learning types play crucial roles in data science, facilitating a richer understanding of data.
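One way the two approaches can be combined is to let clusters spread a handful of manual labels to the rest of the data. The sketch below assumes a clustering step has already produced a cluster id for every sample. The ids, labels, and the function name `propagate_labels` are hypothetical, and the majority-vote rule is only one possible choice.

```python
from collections import Counter

# Hypothetical output of an earlier clustering step: one cluster id per sample.
cluster_ids = [0, 0, 0, 1, 1, 1, 1]
# Only a few samples were labeled by hand; None marks an unlabeled sample.
known_labels = ["low", None, None, "high", None, "high", None]

def propagate_labels(cluster_ids, known_labels):
    """Give each unlabeled sample the majority label of its cluster, if one exists."""
    votes = {}
    for cluster, label in zip(cluster_ids, known_labels):
        if label is not None:
            votes.setdefault(cluster, Counter())[label] += 1
    majority = {c: counts.most_common(1)[0][0] for c, counts in votes.items()}
    return [
        label if label is not None else majority.get(cluster)
        for cluster, label in zip(cluster_ids, known_labels)
    ]

print(propagate_labels(cluster_ids, known_labels))
# ['low', 'low', 'low', 'high', 'high', 'high', 'high']
```

Labels obtained this way are only as good as the clusters, so a sample of them should be checked before they are used to train a supervised model.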

Advantages of Supervised Learning

Supervised learning offers several advantages that make it a popular choice for predictive modeling:

- High Accuracy: Because the model is trained directly against known correct answers, it can achieve high predictive accuracy when the labels are plentiful and representative. This is particularly beneficial in critical applications such as medical diagnosis.

- Clear Objectives: The presence of labeled data provides clear objectives for the model, enabling focused training. This clarity leads to more reliable outcomes.

- Predictive Power: Supervised learning techniques excel at making predictions based on historical data, which is invaluable in fields like finance for stock price forecasting.

- Robustness: Ensemble methods such as random forests improve robustness by combining the predictions of multiple models, so that the errors of any single model matter less.

These advantages underscore the significance of supervised learning in driving data-driven decisions across various industries.

Advantages of Unsupervised Learning

Unsupervised learning shines in its ability to discover hidden patterns and insights within data that lacks labels. This method is particularly valuable in various fields where understanding the underlying structure of data is crucial. Here are some key advantages:

- Pattern Recognition: Unsupervised learning can identify natural groupings and relationships in data. For instance, in customer segmentation, businesses can categorize customers based on purchasing behavior without prior knowledge of the segments.

- Data Exploration: It allows for the exploration of datasets to uncover trends and anomalies. This exploratory analysis can lead to insights that might not be evident through labeled data alone.

- No Need for Labeled Data: Since unsupervised learning does not require labeled data, preparing a dataset is often faster and cheaper. This is especially beneficial in situations where labeling data is impractical or costly.

- Flexibility: The techniques used in unsupervised learning, such as clustering and dimensionality reduction, can be applied across various domains, making it a versatile tool for data analysis.

In summary, unsupervised learning is essential for extracting valuable insights from unlabeled datasets, enhancing decision-making processes across multiple industries.

Limitations of Supervised Learning

While supervised learning is a powerful approach, it comes with several limitations that practitioners must consider. Here are some notable challenges:

- Dependence on Labeled Data: Supervised learning requires a significant amount of labeled data, which can be time-consuming and expensive to obtain. In many cases, acquiring high-quality labels is a bottleneck in the modeling process.

- Overfitting: There is a risk that models trained on labeled data may become too tailored to the training set, failing to generalize well to new, unseen data. This phenomenon, known as overfitting, can lead to poor performance in real-world applications.

- Bias in Data: If the labeled data is biased, the model will inherit those biases, potentially leading to unfair or inaccurate predictions. Ensuring diversity and representativeness in the training data is essential for model fairness.

- Limited Scope: Supervised learning focuses on specific outcomes, which can limit the exploration of broader patterns and relationships in the data.

Thus, understanding these limitations is crucial for effectively applying supervised learning techniques and ensuring robust and equitable outcomes.
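Overfitting can be seen on a very small scale by comparing accuracy on the training data with accuracy on held-out data. The toy dataset below was constructed for this purpose and includes one deliberately mislabeled point; the k-nearest-neighbor helper functions are illustrative, not a library API.

```python
from collections import Counter

# Toy 1-D data: (feature, label). The point at 3.0 carries a deliberately noisy label.
train = [
    (1.0, "A"), (2.0, "A"), (3.0, "B"), (4.0, "A"),
    (6.5, "B"), (7.0, "B"), (8.0, "B"), (9.0, "B"),
]
test = [(2.9, "A"), (3.2, "A"), (6.4, "B"), (1.4, "A")]

def knn_predict(train_data, x, k):
    """Majority label among the k training points closest to x."""
    neighbors = sorted(train_data, key=lambda pair: abs(pair[0] - x))[:k]
    votes = Counter(label for _, label in neighbors)
    return votes.most_common(1)[0][0]

def accuracy(train_data, data, k):
    """Fraction of samples in `data` whose predicted label matches the true label."""
    correct = sum(knn_predict(train_data, x, k) == y for x, y in data)
    return correct / len(data)

for k in (1, 3):
    print(k, accuracy(train, train, k), accuracy(train, test, k))
# 1 1.0 0.5
# 3 0.875 1.0
```

With k = 1 the model memorizes every training point, including the noisy one, so it scores perfectly on the training set and poorly on the held-out set. Averaging over three neighbors gives up a little training accuracy and generalizes better on this data.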

Challenges of Unsupervised Learning

Unsupervised learning also faces its own set of challenges that users must navigate. Here are key limitations:

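- Difficulty in Evaluation: Unlike supervised learning, where accuracy can be measured against known labels, evaluating the performance of unsupervised models can be challenging. The lack of predefined outcomes makes it hard to determine how well a model is performing. Internal measures such as the silhouette score, which compares how close each point is to its own cluster versus the nearest other cluster, offer only a partial substitute.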

- Interpretation of Results: The insights generated from unsupervised learning can be complex and difficult to interpret. Users may struggle to understand the significance of the patterns identified, which can hinder decision-making.

- Potential for Misleading Insights: Algorithms may find patterns that are not meaningful or relevant. This risk requires careful validation and analysis of the results to avoid drawing incorrect conclusions.

- Scalability Issues: Some unsupervised techniques scale poorly to large datasets. Hierarchical clustering, for example, typically works from all pairwise distances, so its cost grows roughly quadratically with the number of samples.

Recognizing these challenges is vital for effectively leveraging unsupervised learning in data analysis.
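When no labels exist, one common workaround for the evaluation problem is an internal measure such as the silhouette score. The sketch below is a plain-Python version that assumes at least two clusters; the points and the two candidate groupings are hypothetical.

```python
import math

def silhouette(points, labels):
    """Mean silhouette score; assumes at least two distinct cluster labels."""
    scores = []
    for i, p in enumerate(points):
        same = [
            math.dist(p, q)
            for j, q in enumerate(points)
            if labels[j] == labels[i] and j != i
        ]
        if not same:
            scores.append(0.0)  # usual convention for a single-point cluster
            continue
        a = sum(same) / len(same)  # mean distance within the point's own cluster
        b = min(                   # mean distance to the nearest other cluster
            sum(math.dist(p, q) for q, l in zip(points, labels) if l == other)
            / labels.count(other)
            for other in set(labels)
            if other != labels[i]
        )
        scores.append((b - a) / max(a, b))
    return sum(scores) / len(scores)

points = [(1.0, 1.0), (1.5, 2.0), (1.0, 1.5), (8.0, 8.0), (9.0, 8.5), (8.5, 9.0)]
good_score = silhouette(points, [0, 0, 0, 1, 1, 1])  # groups match the visible structure
bad_score = silhouette(points, [0, 1, 0, 1, 0, 1])   # groups cut across it
print(good_score > bad_score)  # True
```

Scores near 1 indicate compact, well-separated clusters. A high score still does not prove that the clusters are meaningful for the problem at hand.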

Labeled Data in Supervised Learning

Labeled data is the cornerstone of supervised learning. It serves as the foundation upon which models learn to make predictions. Here’s how labeled data is used:

- Training Phase: During the training phase, the algorithm is exposed to labeled examples, allowing it to learn the relationship between input features and their corresponding outputs. Each training example consists of an input and the correct output.

- Model Evaluation: Labeled data is also essential for evaluating model performance. By comparing predicted outcomes against true labels, users can assess the accuracy and effectiveness of the model.

- Feedback Mechanism: The presence of labeled data enables a feedback loop, where models can be refined and improved based on their performance on the labeled dataset.

- Real-World Applications: Labeled data is critical in applications such as medical diagnosis, where the consequences of predictions can be significant.

In essence, labeled data is indispensable for training and validating supervised learning models, ensuring they perform effectively in real-world scenarios.
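The evaluation step described above amounts to counting agreements and disagreements between predictions and true labels. The sketch below does this for a binary task; the label vectors are invented for illustration and `confusion_counts` is a hypothetical helper.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Return (true positives, false positives, false negatives, true negatives)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    tn = sum(t != positive and p != positive for t, p in pairs)
    return tp, fp, fn, tn

# Hypothetical true labels and model predictions on a held-out set
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 1]

tp, fp, fn, tn = confusion_counts(y_true, y_pred)
accuracy = (tp + tn) / len(y_true)
precision = tp / (tp + fp)  # how many predicted positives were correct
recall = tp / (tp + fn)     # how many actual positives were found

print(accuracy, precision, recall)  # 0.625 0.6 0.75
```

In a setting like medical diagnosis, recall and precision often matter more than overall accuracy, because missing a positive case and raising a false alarm have different costs.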

Unlabeled Data in Unsupervised Learning

Unlabeled data plays a central role in unsupervised learning, allowing algorithms to explore and analyze datasets without predefined labels. Here’s how unlabeled data is utilized:

- Pattern Discovery: Unsupervised learning algorithms analyze unlabeled data to uncover hidden structures and relationships. This can lead to valuable insights, such as identifying customer segments or detecting anomalies.

- Feature Extraction: Techniques like dimensionality reduction can be applied to unlabeled data to simplify datasets while retaining essential information, making it easier to visualize and analyze.

- Data Preprocessing: Unlabeled data can be used in preprocessing steps to inform supervised learning models. For example, clustering can help in labeling data for supervised training.

- Exploratory Analysis: Unlabeled data allows for exploratory analysis, helping researchers and analysts generate hypotheses and identify areas for further investigation.

Thus, unlabeled data is crucial for uncovering insights and enhancing the understanding of datasets, paving the way for informed decision-making.
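As one small example of detecting anomalies in unlabeled data, the sketch below flags unusual values with a modified z-score based on the median and the median absolute deviation. The transaction amounts are hypothetical; the constant 0.6745 and the cutoff of 3.5 are commonly cited conventions for this score and should be treated as tunable assumptions.

```python
import statistics

def find_anomalies(values, threshold=3.5):
    """Return the values whose modified z-score exceeds `threshold`."""
    median = statistics.median(values)
    mad = statistics.median(abs(v - median) for v in values)
    if mad == 0:
        return []  # no spread around the median, so the score is undefined
    return [v for v in values if 0.6745 * abs(v - median) / mad > threshold]

# Hypothetical transaction amounts with no labels attached
amounts = [10, 12, 11, 13, 12, 11, 95]
print(find_anomalies(amounts))  # [95]
```

The median is used instead of the mean because a single extreme value shifts the mean and standard deviation enough to hide itself.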